5G-EPICENTRE: AR and AI wearable electronics for PPDR

Youbiquo (YBQ) is the manufacturer of the “Talens” Smart Glasses, a wearable computer equipped with AR and AI features. Having achieved several successes in the manufacturing industry, the Smart Glasses come equipped with Smart Personal Assistant and Video Conference software, which YBQ plans to integrate into the rescue and operations environment. In this Use Case (UC7), YBQ aims to experiment with its Talens Holo Smart Glasses in 5G network conditions, targeting a case of interest to the PPDR domain, which is described below.

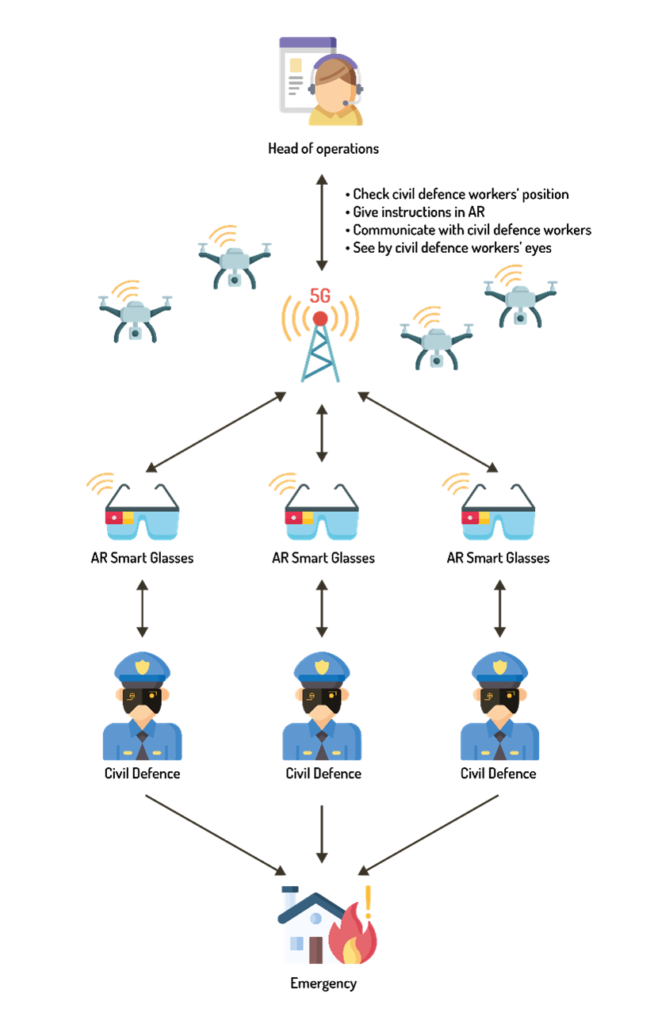

As shown in the figure, instance segmentation and edge detection will be used to overlay useful information directly on top of the real world through the optical see-through display worn by civil defence workers (on-field operators) who patrol or operate in a designated area. Realising this scenario requires low-latency interconnection of edge devices, so that the costly, AI-driven semantic segmentation procedures based on Machine Learning (ML) can run efficiently enough to provide real-time view annotation. The officers' overall situational awareness will be complemented by data exchanged between the operation site and the Command & Control Center (CCC). Finally, if drones are available on site, ML-processed information from their cameras, such as the number of people injured, the location of fires and other public forces in the field, can be shown on the AR layer.

UC7 real-time semantic segmentation
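To make the edge-detection step more concrete, the following minimal sketch computes a binary edge mask from a grayscale frame using a Sobel operator. It is an illustration only: the source does not state which edge detector or segmentation model UC7 uses, and the function name `sobel_edge_mask`, the list-of-lists image representation and the threshold value are assumptions made for this example.

```python
from typing import List

Image = List[List[float]]  # grayscale intensities, row-major (assumed representation)

# Standard 3x3 Sobel kernels for horizontal and vertical gradients.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_edge_mask(gray: Image, threshold: float) -> List[List[bool]]:
    """Return a mask that is True where the gradient magnitude >= threshold.

    Border pixels are left False because the 3x3 kernel does not fit there.
    """
    height, width = len(gray), len(gray[0])
    mask = [[False] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = gy = 0.0
            for dy in range(3):
                for dx in range(3):
                    pixel = gray[y + dy - 1][x + dx - 1]
                    gx += SOBEL_X[dy][dx] * pixel
                    gy += SOBEL_Y[dy][dx] * pixel
            mask[y][x] = (gx * gx + gy * gy) ** 0.5 >= threshold
    return mask


# Hypothetical 5x6 frame: dark left half, bright right half.
frame = [[0.0, 0.0, 0.0, 255.0, 255.0, 255.0] for _ in range(5)]
edges = sobel_edge_mask(frame, threshold=100.0)
```

In an AR overlay, the True cells of such a mask would mark the pixels to highlight on the see-through display. A production pipeline would more likely rely on an optimised vision library than on pure Python loops.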

Experiment phases or deployment scenarios

A set of civil defence workers wearing Smart Glasses can see AR information on the disaster scene. The AR layer combines information processed locally in the wearable processing unit with information processed remotely by ML algorithms in the CCC. The Smart Glasses worn by each operator send an audio/video stream to the CCC for ML-based situational awareness evaluation. In the CCC, a set of views can be developed to analyse the information coming from the disaster field, segmented by meaning, e.g., a heat map highlighting the movements of operators in the field. Every operator wearing the Smart Glasses can start an audio/video call with the remote CCC.
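As an illustration of the heat map view mentioned above, the sketch below bins operator positions into a grid and counts the samples per cell. The coordinate system (metres in a local frame), the cell size and the function name `movement_heat_map` are assumptions for this example. The source does not describe how the CCC views are implemented.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def movement_heat_map(
    positions: Iterable[Tuple[float, float]],
    cell_size: float,
    cols: int,
    rows: int,
) -> List[List[int]]:
    """Count position samples per grid cell; samples outside the grid are ignored."""
    counts: Counter = Counter()
    for x, y in positions:
        col = int(x // cell_size)
        row = int(y // cell_size)
        if 0 <= col < cols and 0 <= row < rows:
            counts[(row, col)] += 1
    # Counter returns 0 for cells that were never visited.
    return [[counts[(r, c)] for c in range(cols)] for r in range(rows)]


# Hypothetical position samples (x, y) in metres, on a 20 m x 20 m area.
samples = [(1.0, 1.0), (2.5, 3.0), (12.0, 1.0), (25.0, 25.0), (-1.0, 2.0)]
grid = movement_heat_map(samples, cell_size=10.0, cols=2, rows=2)
```

Cells with higher counts correspond to areas where operators spent more time, and could be rendered in warmer colours in a CCC view.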

In this UC, patrolling drones can also be used, effectively supporting partners working on drone deployment and communication.

The drones could be sent to the disaster field before the civil defence workers arrive. Drone cameras can map the scene and share their images with the CCC, and this information can be useful to the Smart Glasses wearers before they reach the field.

Moreover, during the action, users wearing the Smart Glasses can receive processed real-time information from the video flow acquired by the drones. Using the fast 5G connection, the video flow can be sent from the drones to the CCC, processed with ML algorithms, and the results then sent to the Smart Glasses, giving wearers complete awareness of the situation.
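The last step of this flow, turning ML detections from a drone frame into a compact summary for the AR layer, could look like the sketch below. The `Detection` type, the label names and the confidence threshold are hypothetical. The source does not specify the ML output format or the message schema exchanged between the CCC and the Smart Glasses.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Detection:
    """One ML detection on a drone frame (hypothetical schema)."""
    label: str
    confidence: float


def summarise_for_ar(
    detections: List[Detection], min_confidence: float = 0.5
) -> Dict[str, int]:
    """Count detections per label, dropping those below the confidence threshold."""
    counts = Counter(
        d.label for d in detections if d.confidence >= min_confidence
    )
    return dict(counts)


# Hypothetical detections produced at the CCC from one drone frame.
frame_detections = [
    Detection("injured_person", 0.9),
    Detection("injured_person", 0.4),  # below threshold, ignored
    Detection("fire", 0.8),
    Detection("injured_person", 0.7),
]
summary = summarise_for_ar(frame_detections)
```

Sending only such a summary, rather than the processed video itself, is one possible way to keep the payload delivered to the Smart Glasses small.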

From a technological point of view, a mobile application has to be designed and developed for the Android platform (the OS of the Smart Glasses) and for managing the drones’ communication. Several AR engines are available; the first tests with the Unity framework are promising.